Security, Privacy & AI Ethics at WorkVis.io

WorkVis.io is committed to AI security, privacy, ethics, and best practices. This commitment includes protecting the security of your company's data and the privacy of your workers. Where we employ Artificial Intelligence (AI) in our solutions, we do so responsibly and for the benefit of workers through the prevention of workplace injuries. Our customers play an integral role in ensuring our technology is used to elevate their safety culture and create an environment of trust.

Security

WorkVis operates under an Information Security Management Program (ISMP), which defines our security framework and governs the extensive security measures we employ. Our ISMP has achieved SOC 2 compliance through independent evaluation under the AICPA (American Institute of Certified Public Accountants) Trust Services Criteria (TSC). These criteria include security, availability, processing integrity, confidentiality, and privacy. Our evaluation is updated annually. Through our ISMP, we strive to maintain a secure information technology environment that protects the data and systems of WorkVis and our customers, and to ensure that our systems run reliably and satisfy customers’ requirements. Current and prospective customers may request more information on our SOC 2 report by contacting sales@workvis.io.

Our practices include:

- Encryption of data in transit and at rest

- Network protection and monitoring

- Principle of least privilege

- Role-based access control

- Change management

- System hardening

- Disaster recovery and incident response planning

- Logging and intrusion detection

Privacy

At WorkVis.io we take your workers' privacy seriously. Our solutions do not utilize technologies intended to identify specific people (e.g., facial recognition). Where faces or other identifying features may be captured by cameras and processed by our systems, WorkVis offers customers features including face-blurring and text/license plate blurring to protect their workers’ privacy.

Please view our Website Privacy Policy here: https://www.workvis.io/privacy-policy/

View our App Privacy Policy here: https://api.workvis.io/production/privacy

AI Ethics

Our Commitment to Ethical AI

At WorkVis.io, we are committed to developing and using artificial intelligence responsibly. We follow established best practices for AI ethics, guided by principles of fairness, transparency, privacy, and accountability, and we apply the Business Software Alliance (BSA) framework to build trust in AI.

Our approach includes:

- Fairness: We actively work to minimize bias in our AI systems and ensure they serve all users equitably.

- Transparency: We strive to make our AI-driven decisions understandable and provide clear information about how our systems operate.

- Privacy & Security: We prioritize the protection of user data, employing strong safeguards and respecting all applicable privacy regulations.

- Accountability: Our team regularly reviews our AI systems for ethical compliance and impact, and we welcome feedback to continuously improve.

- AI Risk Management: We follow a process to ensure that our system is trustworthy by design.

We believe that ethical AI isn't just good practice; it's essential to building trust and delivering long-term value to our users and communities.

European Union (EU) Artificial Intelligence Act

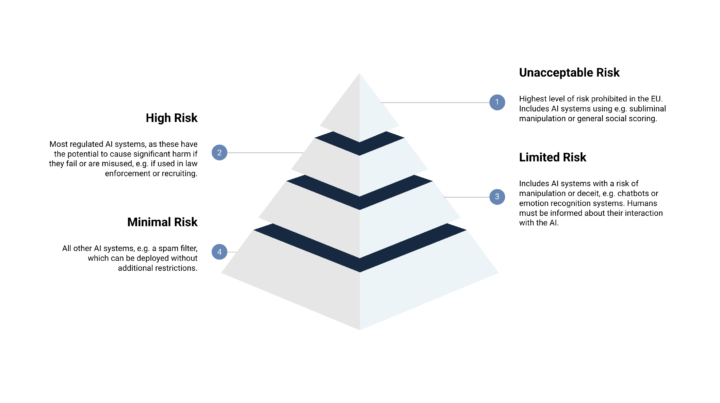

The EU AI Act is the first comprehensive AI regulation that governs how AI models are developed and deployed in the EU. A number of other nations and states are also considering it as a framework for their own regions. The EU AI Act classifies AI applications according to the level of risk they pose to the public.

WorkVis.io is a Provider under the EU AI Act. The WorkVis solutions fall under the Limited Risk category and meet the compliance requirements for that category. Read more about the Act at https://artificialintelligenceact.eu/.

Customer Best Practices

In the ongoing drive towards zero incidents, technology has become an indispensable tool in keeping workers safe in the workplace. As the adoption of technology accelerates, concerns about privacy and surveillance have grown louder – and for good reason.

However, when approached with transparency, responsibility, and a genuine commitment to safety, AI-powered cameras can be viewed as a way to keep workers safe, rather than a way to spy on workers or to punish them.

To develop an environment of trust, we recommend our customers follow these guidelines:

- Establish a Safety Culture

- Clearly Articulate Safety Objectives

- Establish Expected Best Practices for AI Camera Usage

- Limit Camera Usage to Safety Purposes Only

- Encourage Union and Employee Collaboration for Compliance and Monitoring

- Use Incidents for Teaching, Not Punitive Measures

Safety-based AI cameras are an indispensable tool in our journey to a zero-incident world. When approached with clear communication, specific and targeted monitoring, and collaboration with unions, these tools can be seen by employees as a means to help keep them safe, rather than a way to track or punish them. In doing so, companies can foster a culture where productivity, worker safety, and well-being coexist harmoniously.

To learn more about customer best practices, read our blog on the topic here.